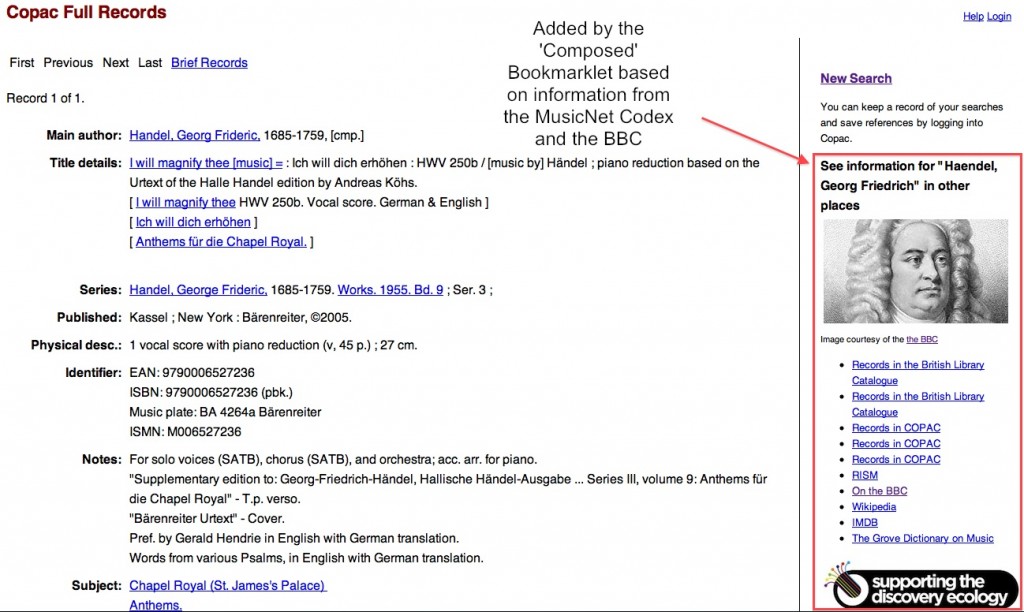

‘Composed’ is my entry for the UK Discovery #discodev developer competition. Composed helps you link between information about composers of classical music by exploiting the MusicNet Codex.

Specifically, Composed currently lets you link from a COPAC catalogue record that mentions a composer to other information and records about that composer.

What is it and how do I install it?

Composed is a bookmarklet, which you can install by dragging this link to your browser bookmark bar: Composed.

How do I use it?

If you haven’t already, drag this Composed bookmarklet to your browser bookmark bar (or otherwise add it to your bookmarks). Next, find a record on COPAC which mentions a classical composer – such as this one – and, once you are viewing it in your browser, click the bookmarklet.

Assuming it is all working, you should find that the display is enhanced by the addition of some links on the right-hand side of the screen. If you’ve used the example given above, you should see:

- An image of the composer (Handel) [UPDATE 5th August 2011: See my comment below about problems with displaying images]

- Links to:

  - Records in the British Library catalogue

  - More records in COPAC

  - The entry for Handel in the Grove Dictionary of Music (subscribers only)

  - Record in RISM (a music and music literature database)

  - A page about Handel on the BBC

  - A page about Handel in the IMDB

  - The Wikipedia page for Handel

These are based on the information available for Handel from the MusicNet Codex and the BBC.

If the record you are looking at contains multiple composers, you should see multiple entries in the display. If there are no links available for the record you are viewing, you should see a message to this effect.

How does it work?

The mechanics are pretty simple. The bookmarklet itself is a small piece of JavaScript, which calls another script. This second script finds the COPAC record ID of the record currently in the browser and passes it to some PHP, which uses a database I built specifically for this purpose to match a COPAC record ID to one or more MusicNet Codex URIs. For each MusicNet Codex URI retrieved, the PHP requests information from the URI and gets back some RDF, from which it extracts the links. If the MusicNet RDF contains a link to the BBC, further RDF is grabbed from the BBC and the relevant information is added. The combined data is then passed back to the JavaScript, which inserts it into the COPAC display.
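The server-side lookup step might be sketched roughly as follows. This is an illustration in Python rather than the actual PHP behind Composed: the table layout, the function names and the `fetch_links` callback (a stand-in for requesting RDF from a MusicNet URI and pulling out its links) are all assumptions made for the example.

```python
import sqlite3


def open_mapping_db():
    """Create an in-memory stand-in for the COPAC-to-MusicNet mapping database.

    The schema is hypothetical: one row per (COPAC record ID, MusicNet URI) pair.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE mapping ("
        " copac_id TEXT NOT NULL,"
        " musicnet_uri TEXT NOT NULL,"
        " PRIMARY KEY (copac_id, musicnet_uri))"
    )
    return conn


def uris_for_record(conn, copac_id):
    """Return every MusicNet URI mapped to one COPAC record ID (may be empty)."""
    rows = conn.execute(
        "SELECT musicnet_uri FROM mapping WHERE copac_id = ? ORDER BY musicnet_uri",
        (copac_id,),
    )
    return [row[0] for row in rows]


def composers_for_record(conn, copac_id, fetch_links):
    """Build one entry per composer found for the record.

    fetch_links(uri) is a caller-supplied function standing in for the
    RDF request and link extraction.
    """
    return [
        {"uri": uri, "links": fetch_links(uri)}
        for uri in uris_for_record(conn, copac_id)
    ]
```

A record that mentions several composers simply yields several rows, which is why the display can show multiple entries; a record with no rows yields an empty list, which corresponds to the "no links available" message.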

So what were the challenges?

Challenge 1

Manipulating RDF. Although I’ve done quite a bit of work with RDF in one form or another, I’ve never actually written scripts that consume it, so this was new to me. I ended up using PHP because the Graphite RDF parser, written by Chris Gutteridge at the University of Southampton, made it so easy to retrieve the RDF and grab the information I needed – although it took me a little while to get my head around navigating a graph rather than a tree (being pretty used to parsing XML).

So I guess I owe Chris a pint for that one 🙂
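To illustrate the graph-versus-tree point from Challenge 1, here is a minimal Python sketch that treats RDF as a set of (subject, predicate, object) triples and walks from one node to another by following predicates. It does not use Graphite, and the example.org URIs, the `image` predicate and the choice of `owl:sameAs` as the linking predicate are assumptions for illustration only, not a description of the MusicNet or BBC data.

```python
# Real vocabulary URIs; whether MusicNet uses them this way is an assumption.
SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
NAME = "http://xmlns.com/foaf/0.1/name"
# Hypothetical predicate, invented for this example.
IMAGE = "http://example.org/vocab/image"

# Made-up triples: (subject, predicate, object).
TRIPLES = {
    ("http://example.org/composer/1", NAME, "Handel"),
    ("http://example.org/composer/1", SAME_AS, "http://example.org/bbc/handel"),
    ("http://example.org/composer/1", SAME_AS, "http://example.org/wikipedia/handel"),
    ("http://example.org/bbc/handel", IMAGE, "http://example.org/bbc/handel.jpg"),
}


def objects(triples, subject, predicate):
    """All objects reachable from subject via predicate, in sorted order."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def follow(triples, start, *predicates):
    """Walk a path of predicates from start, returning the nodes at the end.

    Unlike a tree, there is no single parent or root: any node can be a
    starting point, and a step may fan out to several nodes.
    """
    current = [start]
    for predicate in predicates:
        current = sorted(
            {o for node in current for o in objects(triples, node, predicate)}
        )
    return current
```

With these triples, `follow(TRIPLES, "http://example.org/composer/1", SAME_AS, IMAGE)` hops from the composer to its linked resources and then to any image attached to them, which mirrors the way the BBC data is reached via a link found in the MusicNet data.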

Challenge 2

The major challenge was getting a list of COPAC record IDs which mapped to MusicNet Codex URIs. Actually – I wasn’t able to do this and what I have is an approximation – almost certainly you can find examples where the bookmarklet populates the screen with a composer when there is no composer mentioned in the record.

Unfortunately MusicNet is unable to point at a COPAC identifier or URI for a person – like many library catalogues, COPAC identifies items in libraries (or perhaps more accurately, the records that describe these items), but not any of the entities (people, places, etc.) within the record. This means that while MusicNet can point at a specific URI for the BBC that represents (for example) ‘Handel’, with COPAC all it does is give a URL which triggers a search which should bring back a set of records all of which mention Handel.

There is a whole load of background as to how MusicNet got to this point, and how they build the searches to COPAC – but essentially it is based on the text strings in COPAC that were found by the MusicNet project to refer to the same composer. These text strings are what are used to build the search against COPAC. This is also the explanation as to why you sometimes see multiple links to COPAC/the British Library catalogue in the Composed bookmarklet display – because there are multiple strings that MusicNet found represent the same composer.

What I’ve done to create a rough mapping between MusicNet and COPAC records is to run each search that MusicNet defines for COPAC and grab all the record IDs in the resulting record set. This gives a rough and ready mapping, but there are bound to be plenty of errors in there. For example, one of the searches MusicNet holds for the composer Franz Schubert on the British Library catalogue is http://catalogue.bl.uk/F/?func=find-b&request=Schubert&find_code=WNA – which will actually find everything by anyone called ‘Schubert’. If there are any similar searches in the COPAC data, I’ll be grabbing a lot of irrelevant records in my searching. Since the number of searches, and resulting records, is relatively high (e.g. over 30k records mention Mozart), at the time of writing I’m still in the process of populating my mapping. It is currently listing around 50k [Update: 31/7/2011 at 15:33 – final total is 601,286] COPAC IDs, but I’ll add more as my searches run and produce results in the background.
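The mapping process described above can be sketched in a few lines of Python. This is not the script actually used: `run_search` is a hypothetical callback standing in for "run this search and return the record IDs it finds", and the input structure (a dictionary of composer URI to search URLs) is an assumption about how the MusicNet searches might be held.

```python
from collections import defaultdict


def build_mapping(searches_by_uri, run_search):
    """Map each record ID to the set of composer URIs whose searches returned it.

    searches_by_uri: {composer_uri: [search_url, ...]} (assumed shape)
    run_search: function taking a search URL and returning an iterable of record IDs
    """
    mapping = defaultdict(set)
    for uri, search_urls in searches_by_uri.items():
        for search_url in search_urls:
            for record_id in run_search(search_url):
                mapping[record_id].add(uri)
    return dict(mapping)


def multi_composer_records(mapping):
    """Record IDs attached to more than one composer URI, in sorted order."""
    return sorted(rid for rid, uris in mapping.items() if len(uris) > 1)
```

The sketch also shows where the errors creep in: a broad search such as a bare surname returns records for other people too, and every one of those record IDs is attached to the composer regardless. Records listed by `multi_composer_records` are not necessarily wrong, since a record can genuinely mention several composers, but they are an obvious place to start looking for false matches.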

I’m talking to the MusicNet team to see if they are able at this stage to track back to the original COPAC records they used to derive their data, and so we could get an exact mapping of their URIs to lists of record IDs on COPAC – this would be incredibly useful and allow functions such as mine to work much more reliably.

None of this should be seen as a criticism of either the MusicNet or COPAC teams – without these sources I wouldn’t have even been able to get started on this!

Final Thoughts

I hope this shows how data linked across multiple sources can bring together information that would otherwise be separated. There is no reason in theory why the bookmarklet shouldn’t be expanded to take in the other data sources MusicNet knows about – and possibly beyond (as long as there is access to an ID that can finally be brought back to MusicNet).

Libraries desperately need to move beyond ‘the record’ as the way they think about their data – and start adding the identifiers they already have available to their data – this would make this type of task much easier.

If you want to build other functionality on my rough and ready MusicNet to COPAC record mapping, you can pass COPAC IDs to the script:

http://www.meanboyfriend.com/composed/composed.php?copacid=<copac_record_id>

You’ll get back some JSON containing information about one or more composers with a Name, Links, and an Image if the BBC have a record of an image in their data.
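As a rough sketch of calling the script from Python: the URL and the `copacid` parameter come from above, but the exact shape of the JSON is an assumption here. The example assumes a top-level list of objects, each with `Name`, `Links` and an optional `Image` key, so check a real response before relying on it.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://www.meanboyfriend.com/composed/composed.php"


def build_url(copac_id):
    """Build the request URL for one COPAC record ID."""
    return BASE_URL + "?" + urlencode({"copacid": copac_id})


def fetch_composers(copac_id, timeout=10):
    """Request the JSON for a record and decode it (needs network access)."""
    with urlopen(build_url(copac_id), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def summarise(composers):
    """One summary line per composer.

    Assumes a list of dicts with 'Name', 'Links' and optional 'Image' keys;
    that structure is a guess, not something documented above.
    """
    lines = []
    for composer in composers:
        name = composer.get("Name", "(unknown)")
        link_count = len(composer.get("Links", []))
        has_image = "yes" if composer.get("Image") else "no"
        lines.append(f"{name}: {link_count} links, image: {has_image}")
    return lines
```

`summarise(fetch_composers("<copac_record_id>"))` would then give a quick overview of what came back for a record, with the placeholder replaced by a real COPAC record ID.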