Some slightly sketchy notes of Paul Walk’s talk

Paul says: the real challenges are around:

Business case

IPR

etc.

Technical issues not trivial, but insignificant compared to other challenges

We aren’t building a system here – but thinking about an environment … although probably will need to build systems on top of this at some point

‘The purple triangle of interoperability’!:

- Shared Principles

- Technical Standards

- Community/Domain Conventions

Standards are not the whole story

- Use (open) technical standards

- Require standards only where necessary

- Avoid pushing standards to create adoption

- Establish and understand high-level principles and ‘explain the working out’ – support deeper understanding

Paul suggests some ‘safe bets’ in terms of approaches/principles:

- Use Resource Oriented Architecture

- identify persistently – give global and public identities to your high-order entities (e.g. metadata records, actual resources)

- URLs (or http URIs) are a sensible default for us (although not the only game in town)

- use HTTP and REST
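
As a rough illustration of the 'identify persistently' and 'use HTTP and REST' points (my sketch, not something Paul showed): each high-order entity gets one stable http URI built from an identifier that never changes, and each format is just another URI hanging off it. The base URL, the path layout and the function names are all hypothetical.

```python
from urllib.parse import quote

# Hypothetical base URL for a repository; not taken from the talk.
BASE = "https://repository.example.org"


def record_uri(record_id: str) -> str:
    """Return one stable http URI for a metadata record.

    The identifier is percent-encoded so that characters such as ':' or '/'
    cannot change the path structure.
    """
    return f"{BASE}/records/{quote(record_id, safe='')}"


def representation_uri(record_id: str, fmt: str) -> str:
    """Return the URI of one representation (e.g. 'json', 'xml') of a record."""
    return f"{record_uri(record_id)}.{fmt}"


if __name__ == "__main__":
    print(record_uri("oai:example:123"))
    print(representation_uri("oai:example:123", "json"))
```

The only design point here is that the URI depends on nothing that is likely to change (software name, server layout, file format), which is what makes it safe for others to link to and aggregate.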

Aggregation is a corner-stone of RDTF vision – so make your resources a target for aggregation:

- use persistent identifiers for everything

- adopt appropriate licensing

- ‘Share alike’ may be easier than ‘attribution’

Paul still a little sceptical of ‘Linked Data’ – it’s been the future for a long time. Tools for Linked Data still not good enough – can be real challenge for developers. However, we should be a

Quote Tom Coates: “Build for normal users, developers and machines” – and if possible, build the same interface for all three [hint, a simple dump of RDF data isn’t going to achieve this!]

Expect and enable users to filter – give them ‘feeds’ (e.g. RSS/Atom) – concentrate on making your resources available
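
To make the 'feeds' idea concrete, here is a minimal sketch (mine, not from the talk) of building an Atom feed of recently added resources with Python's standard library. The feed and entry values are made up, and this is not a complete, validated Atom document: Atom also requires author information, for instance, which is left out to keep the example short.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def build_atom_feed(feed_id, title, updated, entries):
    """Build a small Atom feed and return it as a string.

    `entries` is a list of dicts with 'id', 'title' and 'updated' keys,
    where 'id' is the persistent URI of the resource.
    """
    ET.register_namespace("", ATOM_NS)  # serialise Atom as the default namespace
    feed = ET.Element(f"{{{ATOM_NS}}}feed")
    for tag, text in (("id", feed_id), ("title", title), ("updated", updated)):
        ET.SubElement(feed, f"{{{ATOM_NS}}}{tag}").text = text
    for item in entries:
        entry = ET.SubElement(feed, f"{{{ATOM_NS}}}entry")
        for tag in ("id", "title", "updated"):
            ET.SubElement(entry, f"{{{ATOM_NS}}}{tag}").text = item[tag]
        # Link each entry back to the resource itself.
        ET.SubElement(entry, f"{{{ATOM_NS}}}link", href=item["id"])
    return ET.tostring(feed, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical example data.
    print(build_atom_feed(
        "https://repository.example.org/feeds/recent",
        "Recently added records",
        "2011-03-01T12:00:00Z",
        [{"id": "https://repository.example.org/records/1",
          "title": "An example record",
          "updated": "2011-03-01T12:00:00Z"}],
    ))
```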

Paul sees slight risk we embrace ‘open’ at the expense of ‘usability’ – being open is an important first step – but need to invest in making things useful and usable

Developer friendly formats:

- XML has a lot going for it, but also quite a few issues

- well understood

- lots of tools available

- validation is a pain

- very verbose

- not everything is a tree

- JSON has gained rapid adoption

- less verbose – simple

- enables client side manipulation
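
A quick way to see the verbosity point is to serialise the same record both ways. This is only a sketch with a made-up three-field record; real metadata records (with namespaces, attributes, repeated fields) will behave differently, so treat the comparison as illustrative rather than as a measurement.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical flat record; the field names are illustrative only.
record = {"title": "An example record", "creator": "A. Author", "year": "2011"}


def to_json(rec):
    """Serialise the record as a JSON object."""
    return json.dumps(rec)


def to_xml(rec):
    """Serialise the record as a flat XML element with one child per field."""
    root = ET.Element("record")
    for key, value in rec.items():
        ET.SubElement(root, key).text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    as_json, as_xml = to_json(record), to_xml(record)
    print(len(as_json), as_json)
    print(len(as_xml), as_xml)
```

For this record the JSON form is shorter because each field name appears once rather than in an opening and a closing tag.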

Character encodings – huge number of XML records from UK IRs are invalid due to character encoding issues
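
A minimal sketch of the kind of check an aggregator (or a repository, before exposing records) could run to catch this. It assumes records are meant to be UTF-8, which is my assumption rather than anything stated in the talk; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def check_record(raw: bytes) -> str:
    """Report whether a raw XML record is valid UTF-8 and well-formed XML.

    Assumes the record is supposed to be UTF-8 encoded.
    """
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError as exc:
        return f"not valid UTF-8 at byte {exc.start}"
    try:
        ET.fromstring(raw)
    except ET.ParseError as exc:
        return f"not well-formed XML: {exc}"
    return "ok"


if __name__ == "__main__":
    good = "<record>café</record>".encode("utf-8")
    bad = "<record>café</record>".encode("latin-1")  # wrong encoding for UTF-8 XML
    print(check_record(good))
    print(check_record(bad))
```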

Technical Foundations:

- Work going on now – there will be a website, ETA June 2011

- JISC Observatory will gather evidence of ‘good use’ of technical standards etc

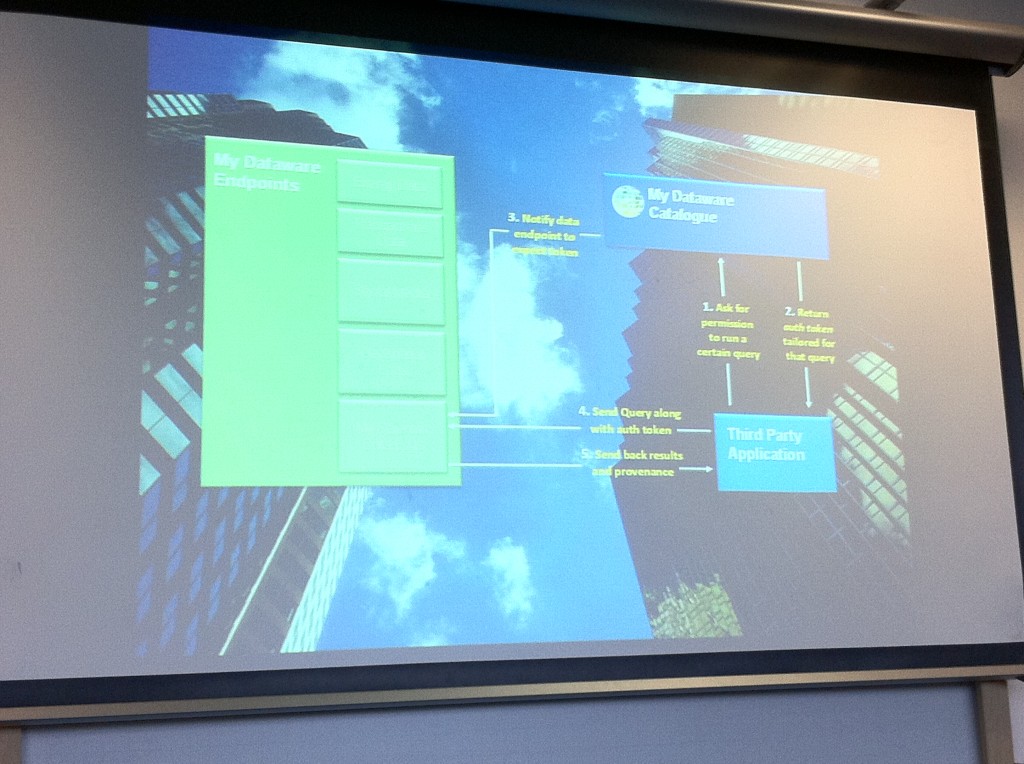

- Need to understand federated aggregation better

Questions for data providers: Do you want to provide a data service, or just provide data?